NVIDIA TAO

Looking for a faster, easier way to create highly accurate, customized, enterprise-ready AI models to power your vision AI applications? The open-source NVIDIA TAO for AI training and optimization delivers everything you need, putting the power of the world's best Vision Transformers (ViTs) in the hands of every developer and service provider. You can now create state-of-the-art computer vision models and deploy them on any device (GPUs, CPUs, and MCUs), whether at the edge or in the cloud.


What Is NVIDIA TAO?

Eliminate the need for mountains of data and an army of data scientists as you create AI/machine learning models, and speed up development with transfer learning. This technique reuses the features an existing neural network model has already learned as the starting point for a new, customized model.

The open-source NVIDIA TAO, built on TensorFlow and PyTorch, applies transfer learning while simplifying model training and optimizing the trained model for inference throughput on practically any platform. The result is a streamlined workflow: take one of the pretrained models, adapt it to your own real or synthetic data, then optimize it for inference throughput, all without needing AI expertise or large training datasets.
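Here is a minimal, framework-free sketch of the transfer-learning idea in Python. It is not TAO code.

- The "pretrained" feature extractor (`FROZEN_WEIGHTS`, `extract_features`) is a hypothetical fixed function standing in for a real backbone.
- The toy dataset is made up.
- Only a new logistic-regression head is trained. The backbone weights are never updated.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical stand-in for a pretrained backbone. In a real workflow these
# weights would come from a network trained on a large dataset; here they are
# fixed numbers that are never updated (the backbone is "frozen").
FROZEN_WEIGHTS: List[List[float]] = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Tiny made-up target dataset: label 1 when both inputs are large.
SAMPLES = [(0.1, 0.2), (0.2, 0.1), (0.0, 0.3), (1.0, 0.9), (0.9, 1.2), (1.1, 1.0)]
LABELS = [0, 0, 0, 1, 1, 1]


def extract_features(x: Sequence[float]) -> List[float]:
    """Apply the frozen weights followed by a ReLU to get 3 features."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, epochs: int = 200, lr: float = 0.5) -> Tuple[List[float], float]:
    """Train only a new logistic-regression head on top of the frozen features."""
    feats = [extract_features(x) for x in samples]  # computed once: backbone is frozen
    head = [0.0] * len(FROZEN_WEIGHTS)
    bias = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(h * fi for h, fi in zip(head, f)) + bias)
            err = p - y  # gradient of binary cross-entropy w.r.t. the logit
            head = [h - lr * err * fi for h, fi in zip(head, f)]
            bias -= lr * err
    return head, bias


def predict(head: List[float], bias: float, x: Sequence[float]) -> bool:
    f = extract_features(x)
    return sigmoid(sum(h * fi for h, fi in zip(head, f)) + bias) >= 0.5


if __name__ == "__main__":
    head, bias = train_head(SAMPLES, LABELS)
    print([predict(head, bias, x) for x in SAMPLES])
```

Only the small head is learned here, which is why transfer learning needs far less data than training the whole network from scratch.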

Key Benefits

Train Models Efficiently

Use TAO's AutoML capability to reduce manual hyperparameter tuning and get to your solutions faster.

Build Highly Accurate AI

Use state-of-the-art (SOTA) Vision Transformers and NVIDIA pretrained models to create highly accurate, custom AI models for your use case.

Optimize for Inference

Go beyond customization: optimize the model for inference to achieve up to 4X higher performance.

Deploy on Any Device

Deploy optimized models on GPUs, CPUs, MCUs, and more.

Faster Time-to-Market With NVIDIA NIM

NVIDIA NIM™ is a set of inference microservices that include industry-standard APIs, domain-specific code, optimized inference engines, and an enterprise runtime. The following foundation models can be used as-is for inference with NVIDIA NIM or fine-tuned for custom vision AI tasks:

- NV-CLIP is a commercial vision foundation model based on the popular CLIP architecture, trained using self-supervised learning on almost 1B image-text pairs. It has both a text encoder and a vision encoder for prompt-based inference.

- NV-DINOv2 is a commercial vision foundation model trained using self-supervised learning on almost 1B images. It can be quickly fine-tuned for various vision AI tasks with only a small amount of training data.

- GroundingDINO is a commercial vision model with both a text encoder and a vision encoder that enable zero-shot detection and segmentation.

NVIDIA TAO is available as a part of NVIDIA AI Enterprise, an enterprise-ready AI software platform to speed time to value while mitigating the potential risks of open-source software.


Why It Matters to Your AI Development

Bring Customized Generative AI to Your Application

Generative AI is a transformative force that will change many industries. It is driven by foundation models that have been trained on a large corpus of text, image, sensor, and other data. Now with TAO, you can fine-tune and customize these foundation models to create domain-specific generative AI applications. TAO supports fine-tuning of foundation models, including multi-modal models, such as NV-DINOv2, NV-CLIP, GroundingDINO, Mask-GroundingDINO, FoundationPose, and more.

TAO also integrates with several cloud and third-party MLOps services to provide developers and enterprises with an optimized AI workflow.


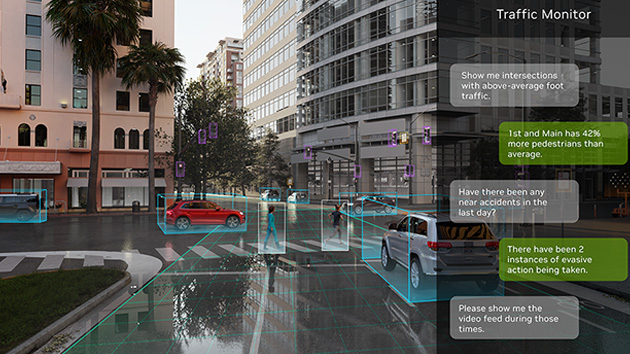

Auto-Labeling Using Text Prompts

New AI-assisted annotation capabilities give you a faster, less expensive way to auto-label object detection bounding boxes and segmentation masks. Developers can detect and segment any object without training or fine-tuning, using only text prompts and descriptors such as "red car" or "box on the conveyor belt." Developers can also fine-tune the model to improve accuracy on specific objects.
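Auto-labeled boxes usually end up in a standard annotation format. The minimal Python sketch below converts prompt-based detections into COCO-style annotation records. It drops low-confidence boxes and prompts that have no category ID.

Two things here are assumptions for illustration, not TAO's actual output or API:

- The detection tuple layout (prompt, score, corner-format box).
- The 0.5 score threshold.

```python
from typing import Dict, List, Tuple

# Hypothetical output of a text-prompted detector for one image:
# (prompt, confidence score, (x_min, y_min, x_max, y_max) in pixels).
# This tuple layout is an assumption for illustration, not TAO's output format.
Detection = Tuple[str, float, Tuple[float, float, float, float]]


def to_coco_annotations(image_id: int, detections: List[Detection],
                        categories: Dict[str, int], min_score: float = 0.5,
                        start_id: int = 1) -> List[dict]:
    """Keep confident detections and convert them to COCO-style annotations."""
    annotations = []
    next_id = start_id
    for prompt, score, (x0, y0, x1, y1) in detections:
        # Drop low-confidence boxes and prompts that have no category ID.
        if score < min_score or prompt not in categories:
            continue
        width, height = x1 - x0, y1 - y0
        annotations.append({
            "id": next_id,
            "image_id": image_id,
            "category_id": categories[prompt],
            "bbox": [x0, y0, width, height],  # COCO uses [x, y, width, height]
            "area": width * height,
            "iscrowd": 0,
        })
        next_id += 1
    return annotations


if __name__ == "__main__":
    categories = {"red car": 1, "box on the conveyor belt": 2}
    detections = [
        ("red car", 0.91, (10, 20, 110, 80)),
        ("box on the conveyor belt", 0.32, (0, 0, 5, 5)),  # below threshold
    ]
    print(to_coco_annotations(7, detections, categories))
```

Filtering by score is one simple way to trade label coverage for label quality. Borderline boxes can instead be routed to human review.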


Create Custom Multi-Modal Fusion Models

In many industries, AI systems rely on various sensors to perceive and interact with their environment. Each sensor type, such as cameras, LiDAR, or radar, provides unique information but also has inherent limitations.

Developers can now create custom multi-modal fusion models in TAO that detect objects and predict 3D bounding boxes by combining image (RGB) and LiDAR point cloud data. TAO offers the BEVFusion model, which fuses data from multiple sensors, such as LiDAR and cameras, into a unified bird's-eye view (BEV) representation.
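BEVFusion learns its bird's-eye-view features with neural networks, so the sketch below is not BEVFusion. It is a much simpler illustration of what a BEV representation means: LiDAR points are projected onto the ground plane and counted per grid cell.

The following are arbitrary assumptions:

- The grid ranges.
- The 0.5-unit cell size.
- The axis convention (x forward, y lateral).

```python
import math
from typing import Iterable, List, Tuple


def lidar_to_bev_counts(points: Iterable[Tuple[float, float, float]],
                        x_range: Tuple[float, float] = (0.0, 40.0),
                        y_range: Tuple[float, float] = (-20.0, 20.0),
                        cell_size: float = 0.5) -> List[List[int]]:
    """Count LiDAR points per cell of a top-down (bird's-eye-view) grid.

    Rows index the forward x axis and columns index the lateral y axis. The
    height z is ignored, which collapses the 3-D cloud into a 2-D view.
    """
    nx = int(round((x_range[1] - x_range[0]) / cell_size))
    ny = int(round((y_range[1] - y_range[0]) / cell_size))
    grid = [[0] * ny for _ in range(nx)]
    for x, y, _z in points:
        i = math.floor((x - x_range[0]) / cell_size)
        j = math.floor((y - y_range[0]) / cell_size)
        if 0 <= i < nx and 0 <= j < ny:  # ignore points outside the grid
            grid[i][j] += 1
    return grid


if __name__ == "__main__":
    cloud = [(1.0, 0.0, 0.5), (1.2, 0.1, 1.0), (50.0, 0.0, 0.0)]
    bev = lidar_to_bev_counts(cloud)
    print(len(bev), len(bev[0]), bev[2][40])
```

Because camera features can also be mapped onto the same top-down grid, a shared BEV layout gives different sensors a common coordinate frame to fuse in.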


Deploy Models on Any Platform

NVIDIA TAO can help power AI across billions of devices. It supports exporting models to ONNX, an open format designed for interoperability, so a model trained with NVIDIA TAO can be deployed on any computing platform that supports ONNX.

TAO integrations are available with:

- MediaTek

- STMicroelectronics

- Arm Ethos-U NPUs

- Edge Impulse

- Nota LaunchX

- Arm CPU and NPU

Inference Performance

Unlock peak inference performance with NVIDIA pretrained models across platforms, from the edge with NVIDIA Jetson™ solutions to the cloud with NVIDIA Ampere architecture GPUs. For details on batch sizes and additional models, see the detailed performance datasheet.


Customer Stories


OneCup AI

OneCup AI's computer vision system tracks and classifies animal activity using NVIDIA pretrained models and TAO, reducing the company's development time from months to weeks.


KoiReader

KoiReader developed an AI-powered machine vision solution using NVIDIA developer tools including TAO to help PepsiCo achieve precision and efficiency in dynamic distribution environments.


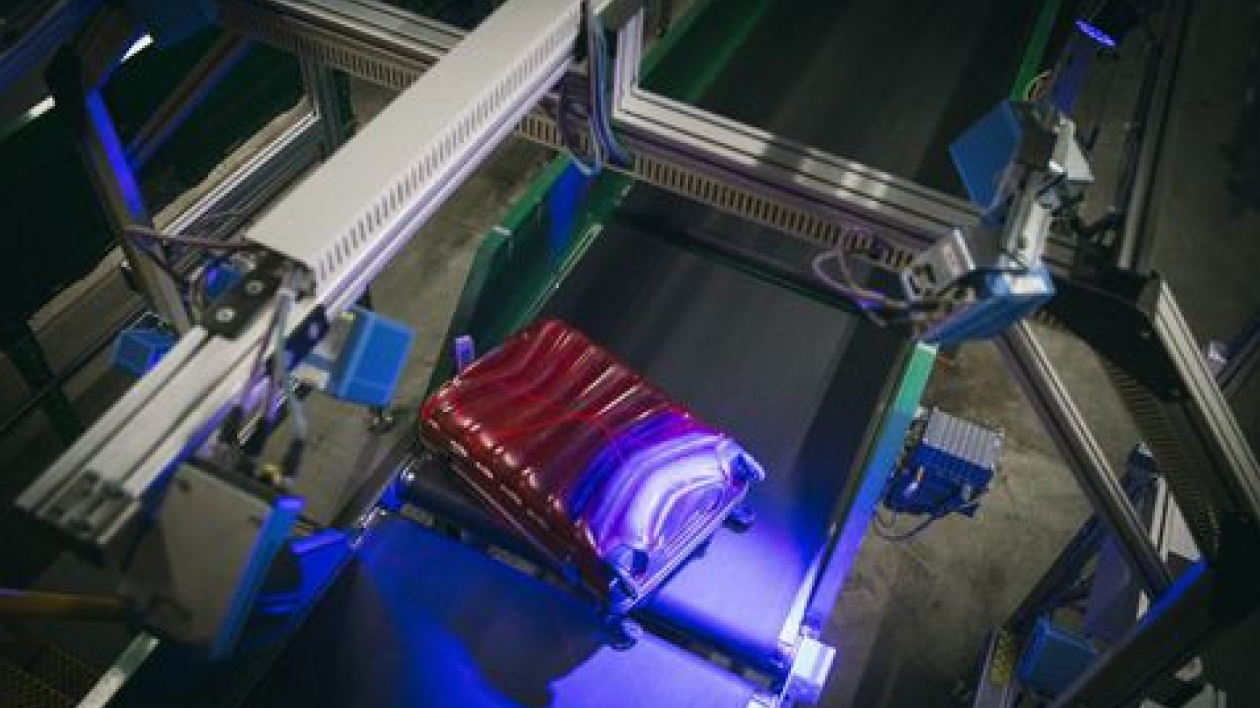

Trifork

Trifork jump-started its AI model development with NVIDIA pretrained models and TAO Toolkit to build an AI-based baggage tracking solution for airports.



General FAQ

The full matrix of supported model architectures is available in the TAO documentation.

NVIDIA AI Enterprise provides:

- Access to exclusive commercial foundation models for vision AI

- Validation and integration for NVIDIA AI open-source software

- Access to AI solution workflows to speed time to production

- Certifications to deploy AI everywhere

- Enterprise-grade support, security, manageability, and API stability to mitigate the potential risks of open-source software

Trained models can be deployed in several ways:

- Vision models can be deployed through NVIDIA DeepStream or NVIDIA Triton™.

- You can also deploy the models in ONNX format on any platform.

Refer to the TAO documentation for deployment details.
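Triton's HTTP endpoint accepts JSON requests in the KServe v2 inference protocol format. The sketch below builds, but does not send, such a request with only the Python standard library. It assumes a server at `localhost:8000` (Triton's default HTTP port).

The model name `my_tao_model` and the tensor names `input` and `output` are hypothetical. They must match the deployed model's configuration.

```python
import json
import urllib.request
from typing import List


def build_infer_request(host: str, model_name: str, input_name: str,
                        shape: List[int], data: List[float],
                        output_name: str) -> urllib.request.Request:
    """Build (but do not send) a KServe v2 HTTP/JSON inference request."""
    expected = 1
    for dim in shape:
        expected *= dim
    if expected != len(data):
        raise ValueError(f"shape {shape} needs {expected} values, got {len(data)}")
    body = {
        # Tensor names must match the deployed model's configuration.
        "inputs": [{"name": input_name, "shape": shape,
                    "datatype": "FP32", "data": data}],
        "outputs": [{"name": output_name}],
    }
    url = f"http://{host}/v2/models/{model_name}/infer"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # "my_tao_model", "input", and "output" are hypothetical names.
    req = build_infer_request("localhost:8000", "my_tao_model", "input",
                              [1, 4], [0.1, 0.2, 0.3, 0.4], "output")
    print(req.full_url, req.get_method())
    # Against a live server, urllib.request.urlopen(req) would send the request.
```

The data is passed as a flat, row-major list, and the shape check catches mismatches before the request reaches the server. For production traffic, Triton's official client libraries are usually a better fit than hand-built JSON.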

Resources

New Blog—TAO 5.5

NVIDIA TAO 5.5 brings new foundation models and training capabilities.


Blog—Vision Transformers

Learn how to improve the accuracy and robustness of vision AI apps with Vision Transformers (ViTs) and NVIDIA TAO.
