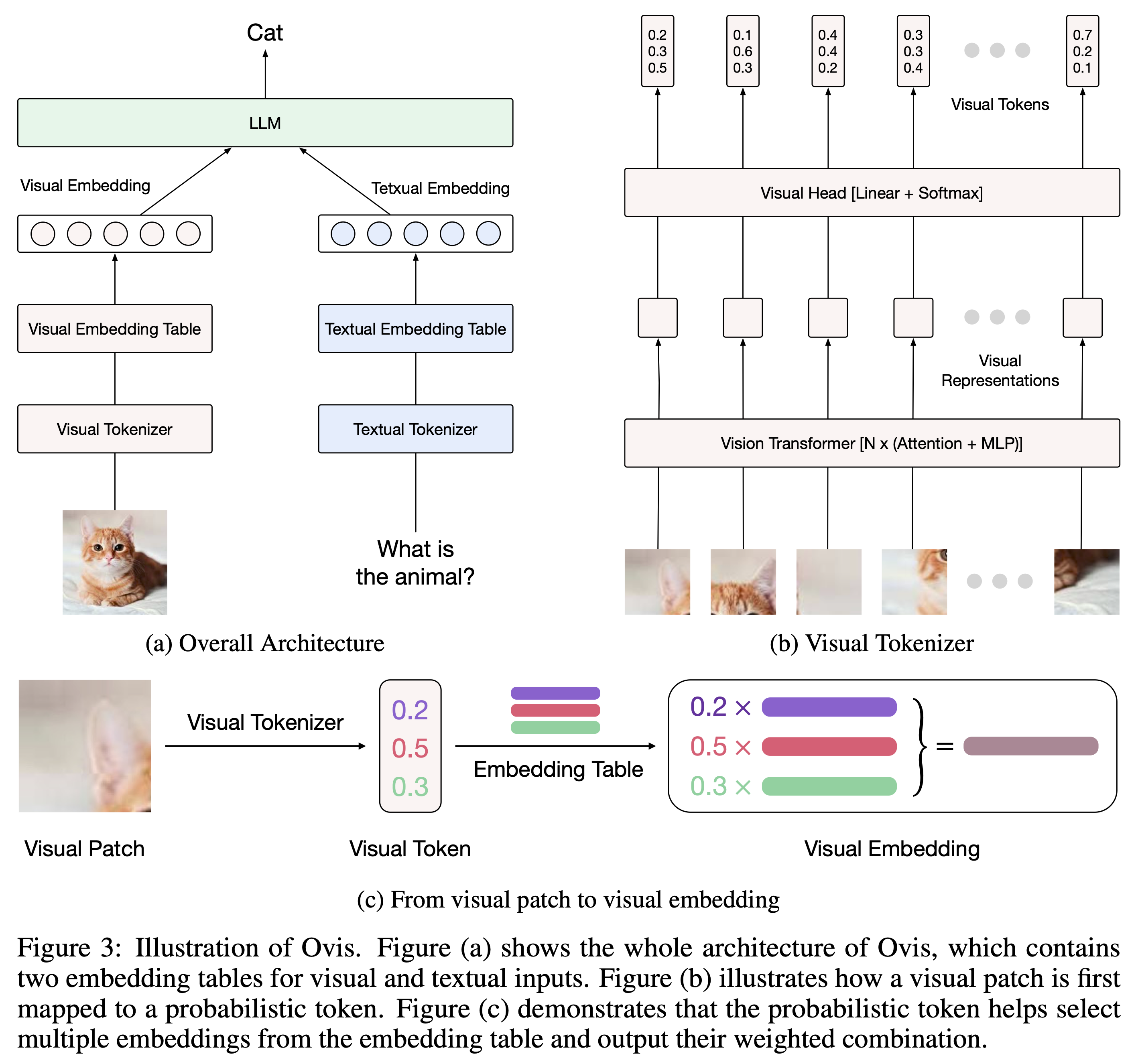

Ovis (Open VISion) is a novel Multimodal Large Language Model (MLLM) architecture, designed to structurally align visual and textual embeddings. For a comprehensive introduction, please refer to the Ovis paper.
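As we read the Ovis paper, "structurally align" works like this. Each image patch is mapped to a probability distribution over a learnable visual vocabulary. The patch's embedding is then the probability-weighted sum of the rows of a visual embedding table. This mirrors how a text token, which is a one-hot distribution, selects a single row of the text embedding table. The pure-Python toy below is a minimal sketch of that idea only. The function names, the linear head, and the toy sizes are illustrative assumptions, not code from this repository.

```python
# Hypothetical toy sketch of probabilistic visual tokens, not the Ovis implementation.
import math
import random


def softmax(logits):
    # Subtract the max for numerical stability before exponentiating
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def probabilistic_token(patch_feature, head):
    # Assumed linear head: one weight row per visual word, giving K logits,
    # turned into a distribution over the K-word visual vocabulary
    logits = [sum(w * f for w, f in zip(row, patch_feature)) for row in head]
    return softmax(logits)


def embed(prob, table):
    # Expected embedding: sum over k of prob[k] * table[k]
    dim = len(table[0])
    return [sum(p * row[j] for p, row in zip(prob, table)) for j in range(dim)]


def text_embed(token_id, table):
    # A text token is a one-hot distribution, so embed() reduces to a row lookup
    one_hot = [1.0 if k == token_id else 0.0 for k in range(len(table))]
    return embed(one_hot, table)


if __name__ == "__main__":
    random.seed(0)
    K, D, F = 4, 3, 5  # toy sizes: visual vocabulary, embedding dim, ViT feature dim
    head = [[random.gauss(0, 1) for _ in range(F)] for _ in range(K)]
    visual_table = [[random.gauss(0, 1) for _ in range(D)] for _ in range(K)]
    patch = [random.gauss(0, 1) for _ in range(F)]
    p = probabilistic_token(patch, head)
    print(len(embed(p, visual_table)))  # 3
```

Because visual and text embeddings are both computed as "distribution times table", they share the same structure. In the real model the tables are learned jointly during training.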

- [09/19] 🔥 Announcing Ovis1.6 (Model, Demo)! This release further enhances high-resolution image processing, is trained on a larger, more diverse, and higher-quality dataset, and refines the training process by adding DPO training after instruction tuning.

- [07/24] 🔥 Introducing Ovis1.5, featuring improved high-resolution image processing and optimized training data for enhanced performance.

- [06/14] 🔥 Launch of Ovis1.0, the inaugural version of the Ovis model.

Ovis has been tested with Python 3.10, Torch 2.2.0, Transformers 4.44.2, and DeepSpeed 0.14.4. For a comprehensive list of package dependencies, please consult the requirements.txt file. Before finetuning or inference, please install Ovis as follows.

```bash
git clone git@github.com:AIDC-AI/Ovis.git
conda create -n ovis python=3.10 -y
conda activate ovis
cd Ovis
pip install -r requirements.txt
pip install -e .
```

Ovis can be instantiated with popular LLMs. We provide the following Ovis MLLMs:

| Ovis MLLMs | ViT | LLM | Model Weights |
|---|---|---|---|
| Ovis1.6-Gemma2-9B | Siglip-400M | Gemma2-9B-It | Huggingface |

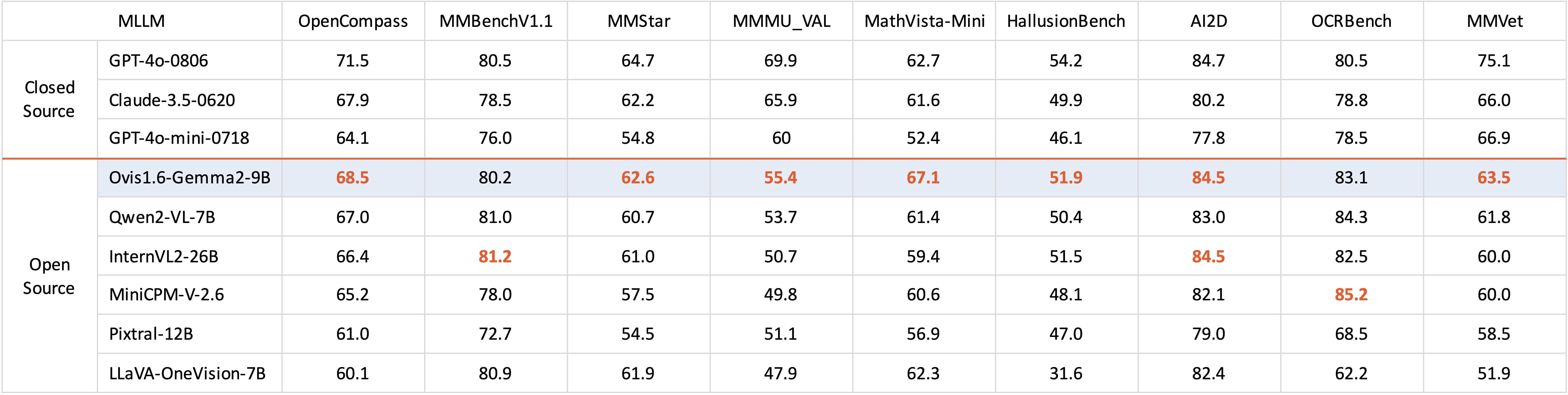

With just 10B parameters, Ovis1.6-Gemma2-9B leads the OpenCompass benchmark among open-source MLLMs with 30B parameters or fewer.

Finetuning code is coming soon.

We provide an inference wrapper in `ovis/serve/runner.py`, which can be used as follows:

```python
from PIL import Image
from ovis.serve.runner import RunnerArguments, OvisRunner

# Replace the placeholders with a real image path and prompt
image = Image.open('IMAGE_PATH')
text = 'PROMPT'

# Build the runner from the model path
runner_args = RunnerArguments(model_path='MODEL_PATH')
runner = OvisRunner(runner_args)

# The input is a list containing the image followed by the text prompt
generation = runner.run([image, text])
```

Ovis can also be accessed through a Gradio-based web user interface:

```bash
python ovis/serve/server.py --model_path MODEL_PATH --port PORT
```

If you find Ovis useful, please cite the paper:

```bibtex
@article{lu2024ovis,
  title={Ovis: Structural Embedding Alignment for Multimodal Large Language Model},
  author={Shiyin Lu and Yang Li and Qing-Guo Chen and Zhao Xu and Weihua Luo and Kaifu Zhang and Han-Jia Ye},
  year={2024},
  journal={arXiv:2405.20797}
}
```

This work is a collaborative effort by the MarcoVL team. We would also like to provide links to the following MLLM papers from our team:

- Parrot: Multilingual Visual Instruction Tuning

- Wings: Learning Multimodal LLMs without Text-only Forgetting

The project is licensed under the Apache 2.0 License and is restricted to uses that comply with the license agreements of Gemma2 and Siglip.